SOCIAL SENSORY ARCHITECTURES

Social Sensory Architectures is an on-going research project led by Sean Ahlquist at the University of Michigan to design technology-embedded multi-sensory environments for children with autism spectrum disorder. The research involves the development of therapies which utilize the reinforcing capabilities of a multi-sensory experience for skill-building tasks related to fine/gross motor control and social interaction. Through the use of advanced textile design, sensing technology and bespoke software, complex textile landscapes are transformed into physically, visually and sonically interactive environments. The research was spurred initially by Ahlquist's observations of his daughter Ara, who has autism along with specific issues such as non-verbal communication, sensory-seeking and hypotonia.

The research integrates the fields of architecture, structural engineering, computer vision, human-computer interaction, psychiatry and kinesiology. Initial development took place through a Research Through Making seed-funding grant from the University of Michigan - College of Architecture and Urban Planning, and involved collaborators from the University of Michigan Departments of Electrical Engineering and Computer Science and the School of Music. Currently, the research involves collaboration with Costanza Colombi of the Department of Psychiatry, and Dale Ulrich and Leah Ketcheson of the School of Kinesiology, supported by an interdisciplinary MCubed grant from the University of Michigan. Various prototypes are currently being piloted, in partnership with local centers working with children with autism, to measure the development of skills in grading of movement and the identification of opportunities for social interaction.

Nexus of sensorial, movement and social function

This research explores the interconnection between the domains of movement, social function and communication. Touch, a primary method for rudimentary nonverbal communication, involves the whole of the somatosensory system to produce the range and nuances of interpersonal interaction. Gestures and facial expressions function via feedback from stretch receptors of the skin and muscles in the hands and arms. Where abnormalities in the somatosensory system exist, as is common for children with autism, there is a correlation with reduced abilities for social attention and impairments in nonverbal communication (Foss-Feig et al. 2012). Children who experience limitations in motor skills are shown to have fewer opportunities for social interaction with peers, correlating with lower levels of physical activity (MacDonald et al. 2014). In comparison with children having speech-language impairments or learning disabilities, those with autism are approximately 50% less likely to be invited to social activities and 450% more likely to never see friends (Shattuck et al. 2011).

sensory[STRUCTURE] | stretch[SOFTWARE]

The prototypes developed in this research are designed with two primary and interchangeable components: (a) the textile-based STRUCTURE, as a two-dimensional surface or a three-dimensional environment, and (b) the SOFTWARE, which drives the sensing of touch and pressure through the Microsoft Kinect and produces the visual and auditory interface developed in Unity.

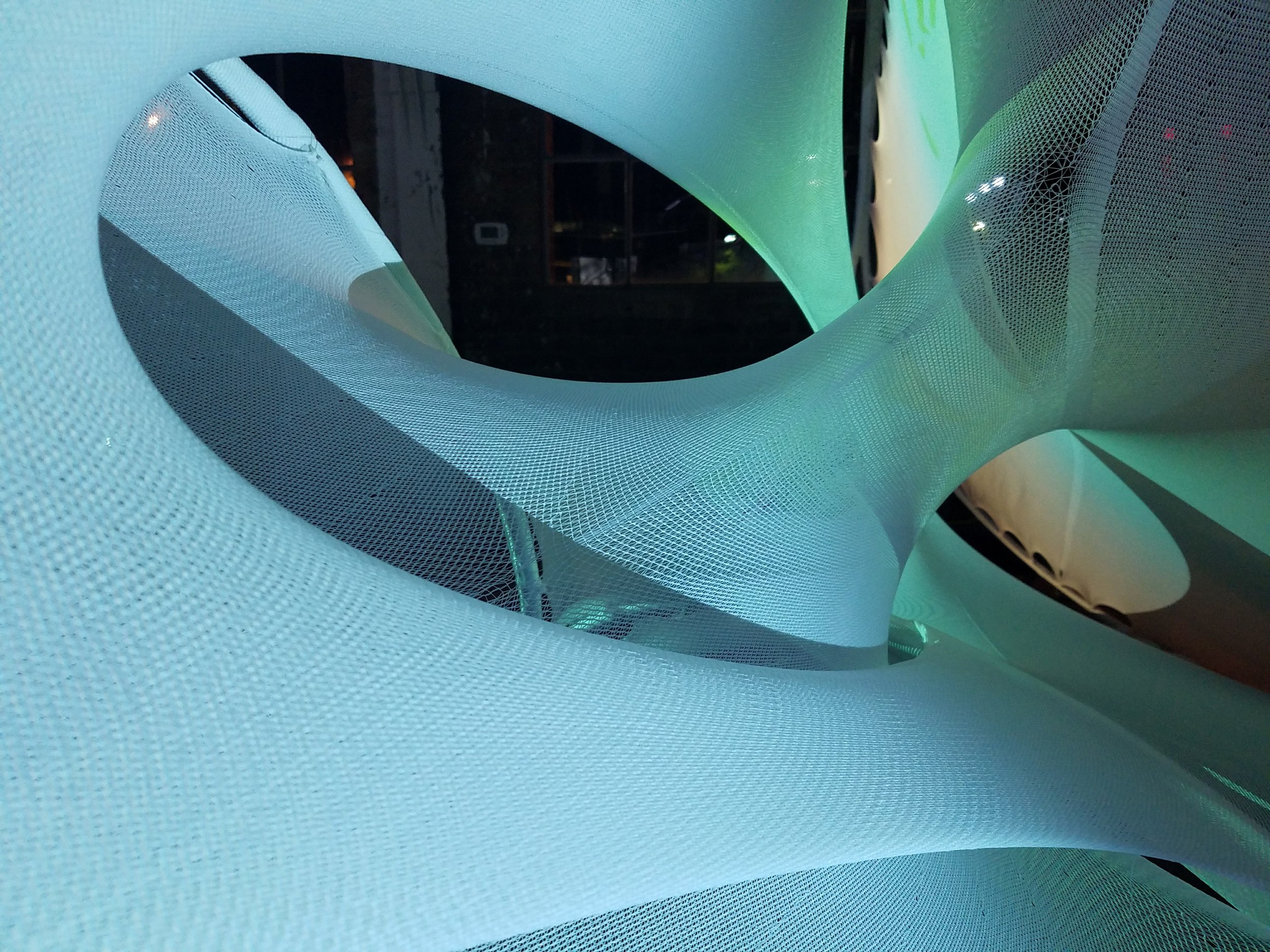

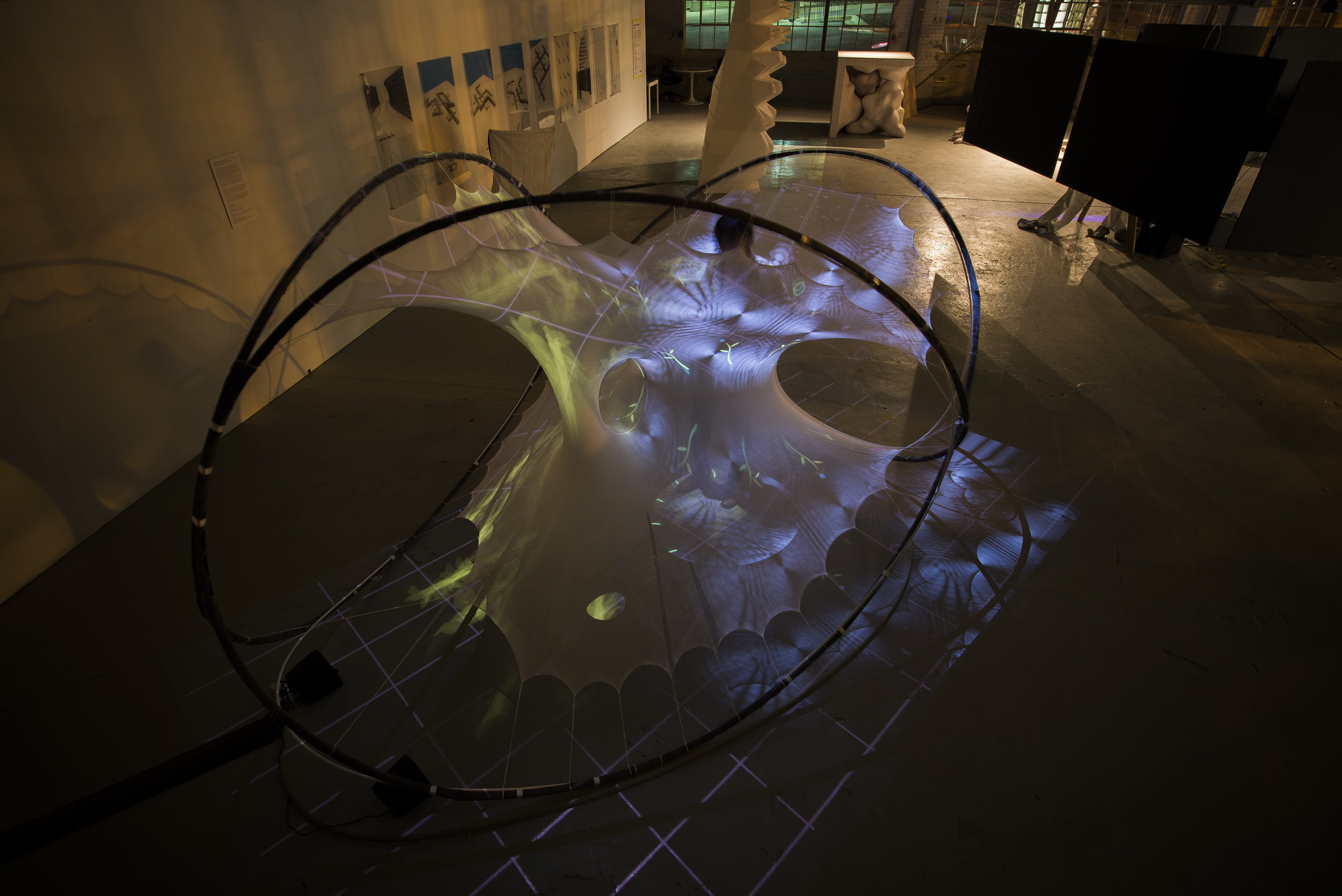

The three-dimensional environments are generated through Prof. Ahlquist's research in textile-hybrid structures. These are tent-like systems formed through the interaction of textiles, stretched in tension, and glass-fiber reinforced rods, stressed through bending. Key to creating these structures in this research is the use of industrial computer-numerically-controlled (CNC) knitting technology. This enables the design and production of large-scale seamless textiles that serve to structure the prototypes, form fluid spatial landscapes for play and produce the elastic, tactile interface.

The software, developed in the programming environment Unity, embeds the visual and auditory interactivity through the use of projection, sensing captured with the Microsoft Kinect, and the design of custom interfaces. The depth-mapping capabilities of the Kinect are used to identify moments where the geometry of the surfaces is altered by someone interacting with the textiles. This functions as a stand-alone algorithm that works equally well on a planar surface or a complex 3D landscape. The many interfaces being developed can therefore be prototyped on any configuration of textile surfaces. This enables an examination of which modes of interaction are better suited to developing skills in motor planning and which are more amenable to social play.
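The depth-based sensing described above can be illustrated with a minimal sketch. This is not the project's Unity code. It assumes that a depth frame of the untouched textile is stored as a baseline and that each new frame is compared against it pixel by pixel; the names `detect_touch` and `threshold_mm` and the millimetre units are hypothetical. Because the comparison is per pixel, the shape of the resting surface does not matter, which is one way the same routine could serve a flat surface and a contoured landscape.

```python
from typing import List, Optional, Tuple

# A depth frame: one distance reading (assumed millimetres) per pixel.
Frame = List[List[float]]


def detect_touch(
    baseline: Frame,
    current: Frame,
    threshold_mm: float = 15.0,  # assumed noise floor, not a value from the project
) -> Optional[Tuple[int, int, float]]:
    """Return (row, col, displacement_mm) of the largest deformation, or None.

    The displacement is the absolute difference from the resting surface,
    so the sketch does not assume which side of the textile the sensor is on.
    """
    best: Optional[Tuple[int, int, float]] = None
    for r, (base_row, cur_row) in enumerate(zip(baseline, current)):
        for c, (base, cur) in enumerate(zip(base_row, cur_row)):
            displacement = abs(cur - base)
            if displacement < threshold_mm:
                continue  # treat small differences as sensor noise
            if best is None or displacement > best[2]:
                best = (r, c, displacement)
    return best
```

Calling `detect_touch(baseline, current)` once per frame yields a touch location and a displacement that an interface could read as pressure.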

sensorySURFACE

The sensorySURFACE | stretchCOLOR prototype focuses on developing skills in fine motor control, particularly the ability to grade movement. Grading of movement is a part of the proprioceptive sense, which processes information both to understand the position of limbs and body in space and to dictate appropriate movement in response to particular stimuli. Dysfunction in proprioception relates to improper processing of information received through muscles, skin, and joints, accompanied by similar issues related to the tactile sense (Kranowitz, 2005). Ara's particular profile defines her as a "sensory seeker." This results in the need for deep pressure applied at the joints, along with more significant skin contact, in order to register and trigger a proprioceptive response. Consequently, the proprioceptive response is quite crude, meaning the amount of movement or fine motor control will be inappropriate (either too much or too little) for a particular task.

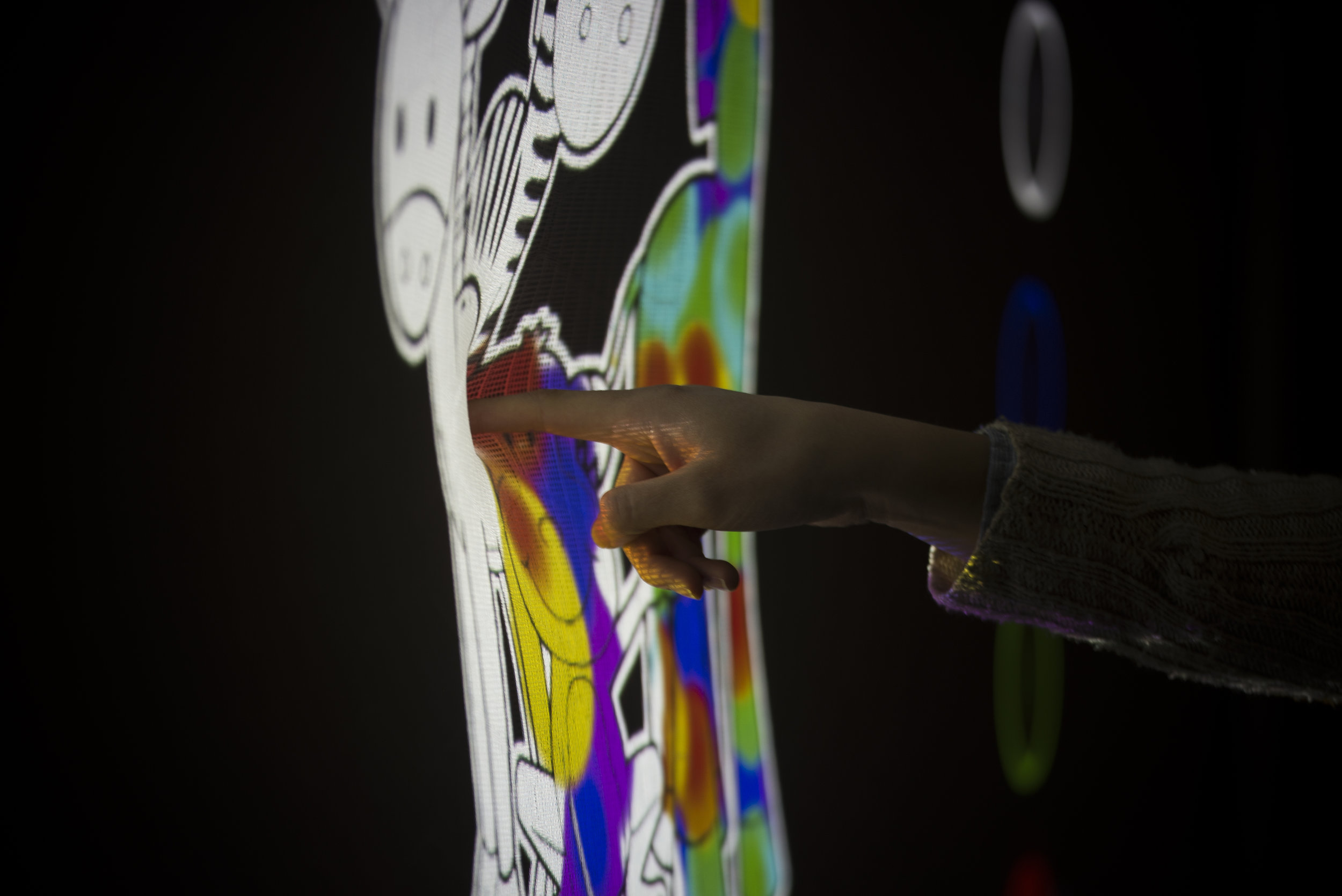

The elasticity in relation to touch and pressure is a key criterion for the design of the textile and the visual responsiveness of the stretchCOLOR software. Strong resistance in the textile triggers pressure in the joints, providing a better chance for the tactility to be identified and subsequently also providing a calming effect (Grandin, 1992). The visual serves to reiterate and magnify the tactile experience. The color projected onto the textile surface at the location of touch is an indicator of the amount of pressure being applied. A deeper touch shifts the color output. Holding at a specific depth triggers the color swatch to grow, rewarding patient, controlled movement with the ability to color a large portion of the canvas with only a single touch.
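The pressure-to-color behavior can be sketched as follows. This is an illustration under stated assumptions, not the stretchCOLOR implementation: the hue range, the maximum depth, the growth rate and the hold tolerance are hypothetical values, as are the names `pressure_to_rgb` and `Swatch`.

```python
import colorsys
from dataclasses import dataclass
from typing import Optional, Tuple


def pressure_to_rgb(depth_mm: float, max_depth_mm: float = 200.0) -> Tuple[int, int, int]:
    """Map touch depth to a color: a deeper touch shifts the hue.

    Assumption: the hue sweeps from red (no depth) toward violet (maximum depth).
    """
    t = min(max(depth_mm / max_depth_mm, 0.0), 1.0)  # clamp to the range 0..1
    r, g, b = colorsys.hsv_to_rgb(0.75 * t, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


@dataclass
class Swatch:
    """A color swatch that grows while the touch is held at a steady depth."""

    base_radius: float = 20.0    # assumed starting size in pixels
    growth_per_s: float = 30.0   # assumed growth rate in pixels per second
    tolerance_mm: float = 10.0   # assumed depth drift still counted as holding
    radius: float = 20.0
    held_depth: Optional[float] = None

    def update(self, depth_mm: float, dt: float) -> float:
        """Advance by dt seconds and return the current swatch radius."""
        holding = (
            self.held_depth is not None
            and abs(depth_mm - self.held_depth) <= self.tolerance_mm
        )
        if holding:
            self.radius += self.growth_per_s * dt  # reward a steady hold
        else:
            self.held_depth = depth_mm             # depth changed: start again
            self.radius = self.base_radius
        return self.radius
```

In this sketch an abrupt change in depth resets the swatch to its base size, so only a sustained, graded touch covers a large part of the canvas.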

sensoryPLAYSCAPE

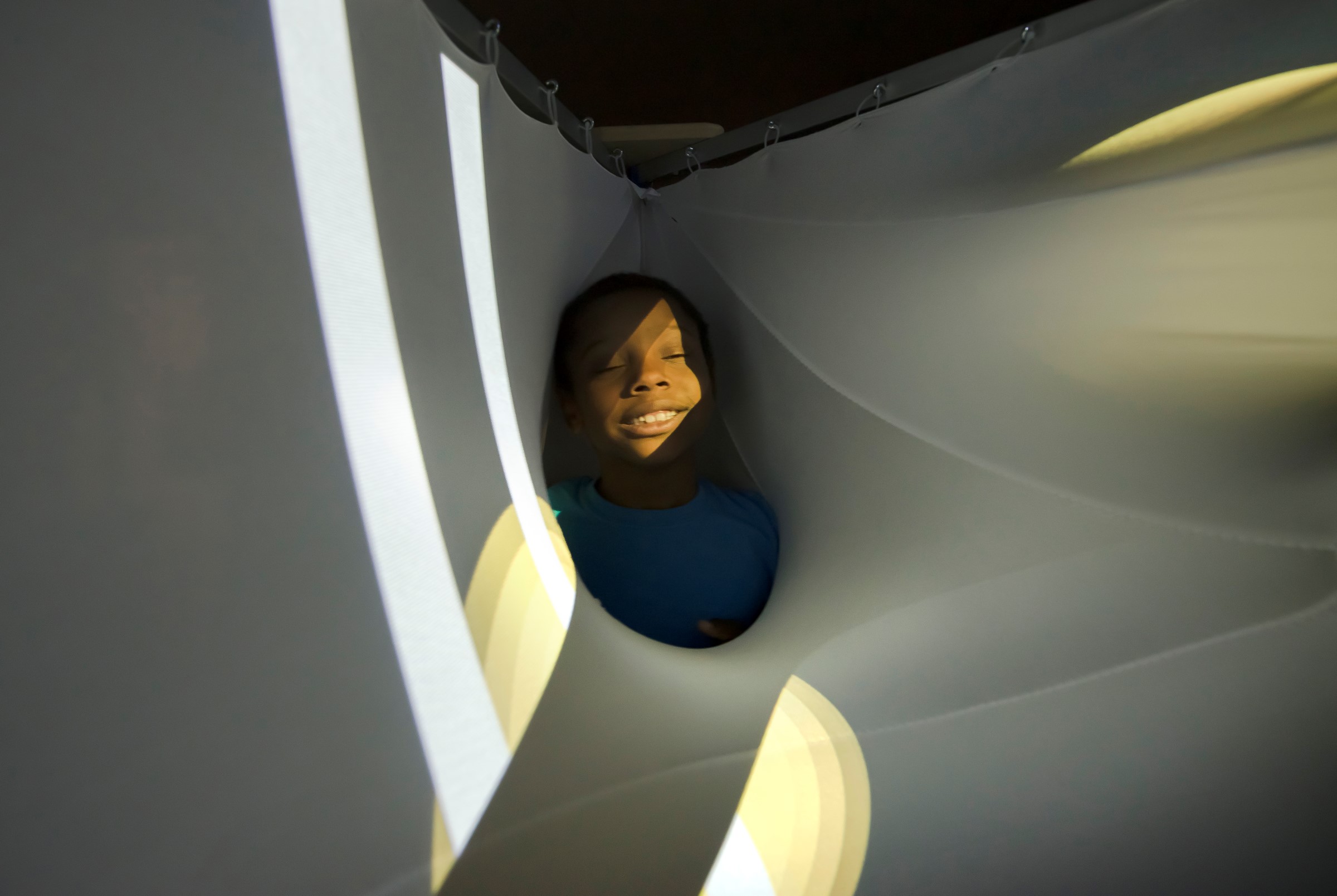

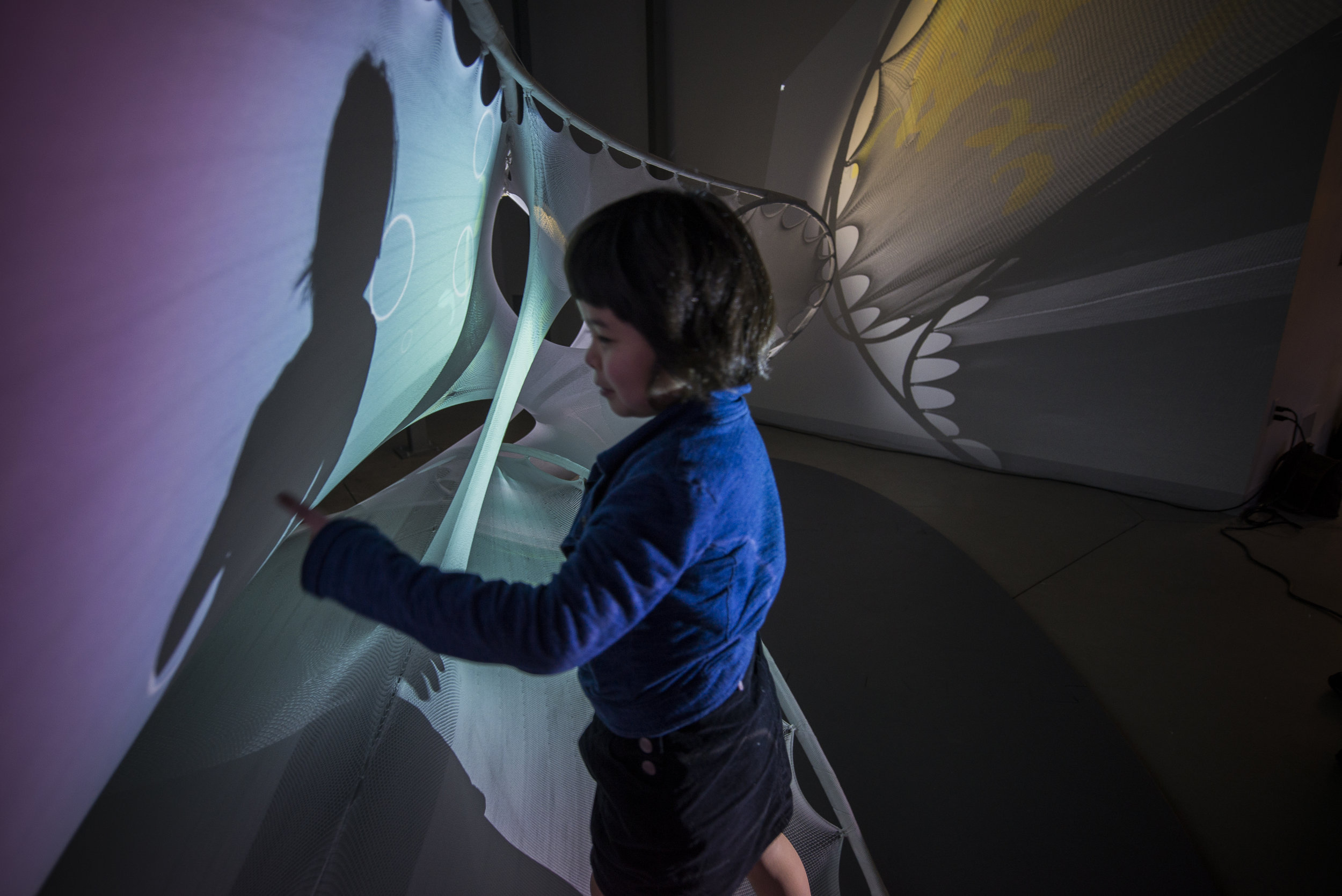

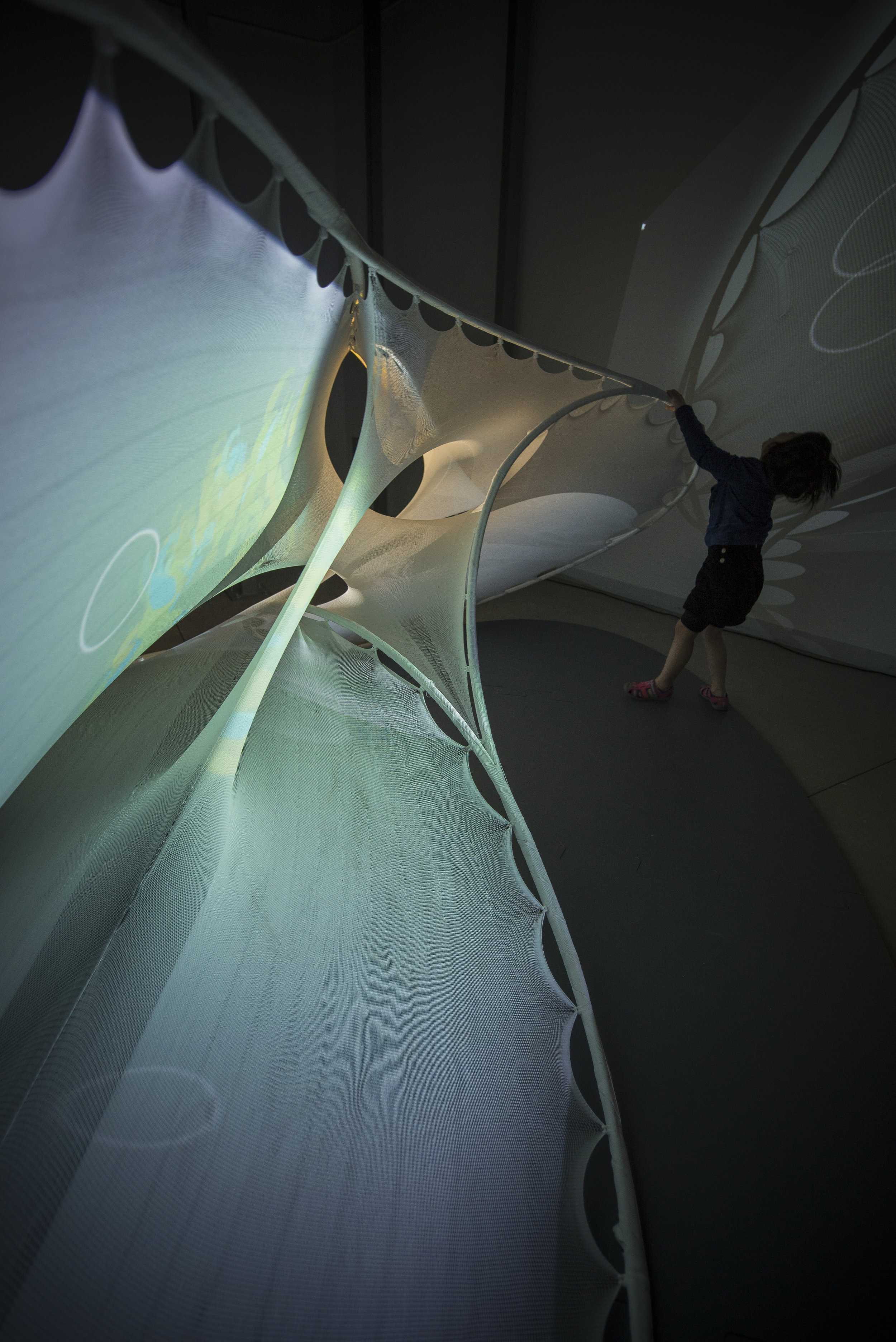

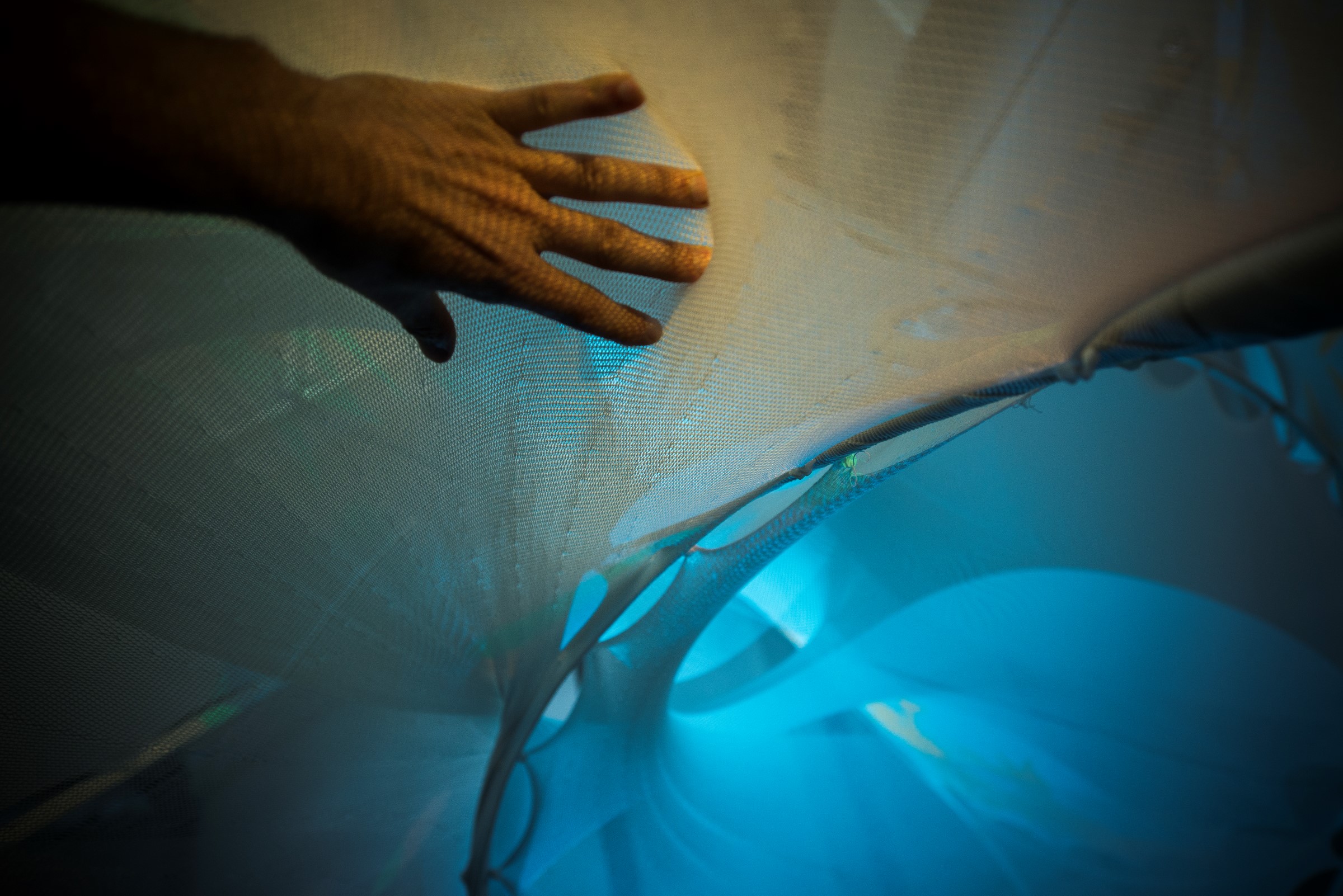

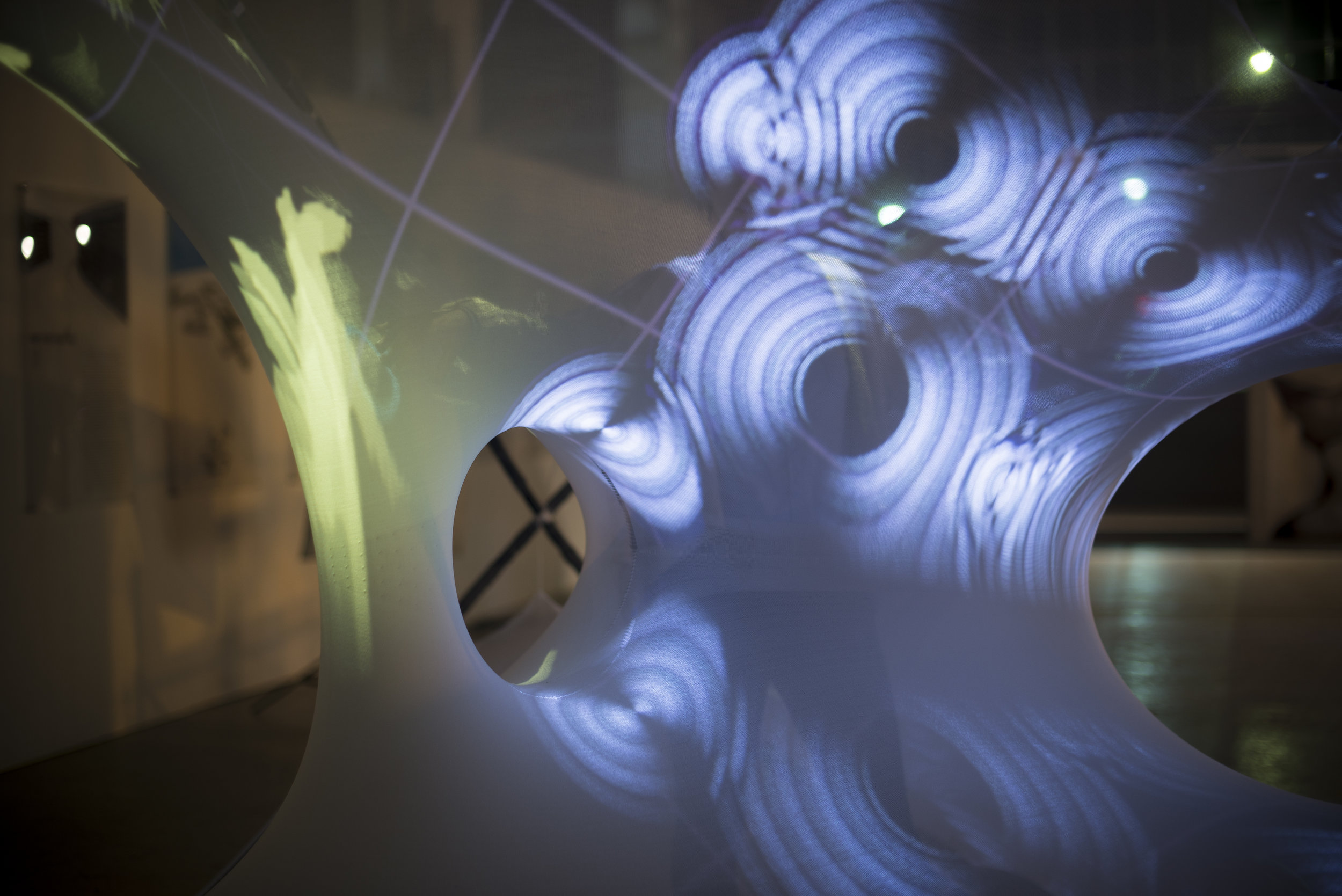

Variable experiences of tactility and movement are the primary design drivers in this research, seeking to encourage and positively reinforce moments of social interaction. With the sensoryPLAYSCAPE prototype in particular, textile elasticity and spatial organization define responsiveness at multiple scales and intensities. The prototype elicits three primary modes of interaction. First, physical exploration, or gross motor movement, is driven by the seamless interconnection of contoured surficial and volumetric geometries. Second, responsiveness to fine motor movements exists in the elastic forgiveness of the knitted textile, a consequence of yarn quality, stitch structure and the distribution of tensile forces. This is expressed in the balance of stretch and resistance at the touch of the hand, or in the encompassing contact between body and textile within the volumetric spaces. Lastly, movement and tactile sensation are augmented with visual and auditory feedback. As with the sensorySURFACE prototype, the multi-sensory feedback seeks to magnify tactile and proprioceptive sensation and, subsequently, positively reinforce specific actions.

The stretchANIMATE software triggers animations to play across the structure when the textile surfaces are touched at key locations. With the trigger points initially hidden, grand movements are encouraged. Moving across the entirety of the structure in search of the triggers subsequently helps provide more sustained tactile sensation. Alternatively, certain animations are activated by simultaneously touching two trigger points. Such points are positioned far enough apart to require two individuals to communicate and coordinate their interaction. The stretchSWARM software works with the more dynamic movement of objects around points of engagement with the textile. A brief touch disturbs a swarming school of fish, while a long touch drives them to attract and swarm around the point of interaction. A deeper touch expands the radius of the swarming activity, responding to children's interest in climbing into the structure and using their entire body to define the point of interaction.
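The interaction rules in this paragraph can be written down as a short sketch. It is an assumption-laden illustration rather than the stretchANIMATE or stretchSWARM code: the `Touch` record, the one-second threshold for a long touch, the linear radius gain and the hit radius are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

LONG_TOUCH_S = 1.0  # assumed boundary between a brief and a long touch


@dataclass
class Touch:
    x: float
    y: float
    depth_mm: float
    duration_s: float


def swarm_mode(touch: Touch) -> str:
    """A brief touch scatters the fish; a long touch attracts them."""
    return "attract" if touch.duration_s >= LONG_TOUCH_S else "scatter"


def swarm_radius(touch: Touch, base: float = 50.0, gain: float = 2.0) -> float:
    """A deeper touch widens the area of swarming activity (assumed linear)."""
    return base + gain * touch.depth_mm


def steer(fish: Tuple[float, float], touch: Touch, speed: float = 1.0) -> Tuple[float, float]:
    """Velocity for one fish: toward the touch when attracting, away when scattering."""
    dx, dy = touch.x - fish[0], touch.y - fish[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0 or dist > swarm_radius(touch):
        return (0.0, 0.0)  # fish outside the radius are unaffected
    sign = 1.0 if swarm_mode(touch) == "attract" else -1.0
    return (sign * speed * dx / dist, sign * speed * dy / dist)


def dual_trigger_active(
    touches: Iterable[Touch],
    point_a: Tuple[float, float],
    point_b: Tuple[float, float],
    hit_radius: float = 40.0,
) -> bool:
    """True only when both trigger points are touched at the same time."""
    touches = list(touches)

    def hit(point: Tuple[float, float]) -> bool:
        return any(math.hypot(t.x - point[0], t.y - point[1]) <= hit_radius for t in touches)

    return hit(point_a) and hit(point_b)
```

If the two trigger points are placed farther apart than one child can reach, `dual_trigger_active` can only return true when two children touch the textile together, which is the coordination the paragraph describes.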

Sensorial Architecture

This on-going research provides the foundation for an architecture which sets the sensorial experience as the primary performative constraint by which material, spatial, visual and sonic landscapes are instrumentalized. Yet perception of space and time, in its social and environmental constituents, is largely atypical for children with autism. In response, those who engage the architecture are given considerable agency to actively and dynamically articulate its material and immaterial natures. Performance of the prototype is defined by the measured understanding of the physiological and sociological human behaviors that occur within it. The manner in which the architecture is transformed communicates the individualized nature of the socio-sensorial experience.

Ara, the daughter of Prof. Ahlquist, is the initial motivator for the research, examining autism, sensory experience and social function.

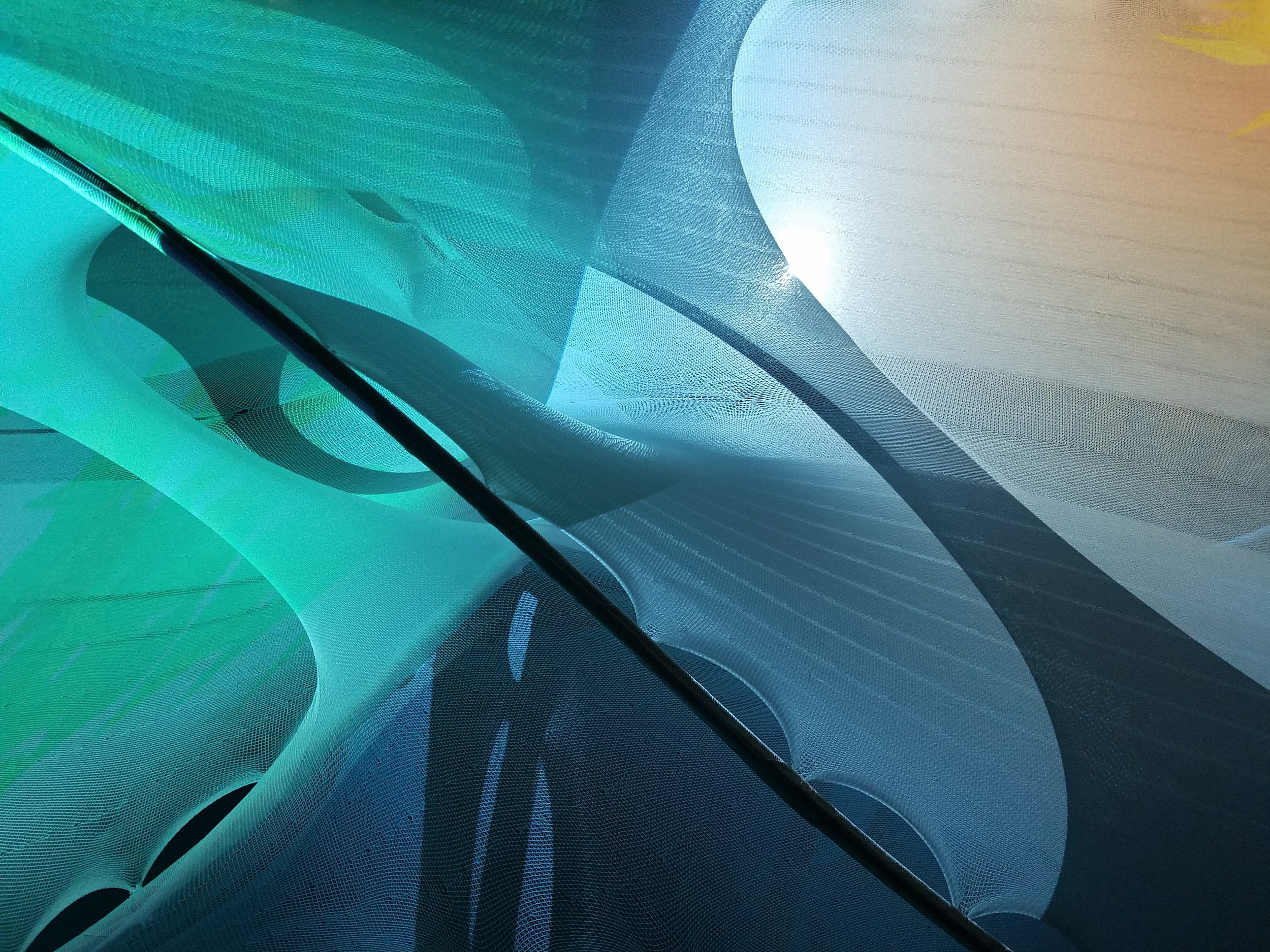

Sensory[STRUCTURE] - Textile-hybrid structure formed of CNC knitted textiles interconnected with glass-fiber reinforced polymer (GFRP) rods.

Sensory[SOFTWARE] - Framework for the sensory architecture prototypes, interconnecting textile structures with the Microsoft Kinect and bespoke software programmed in Unity.

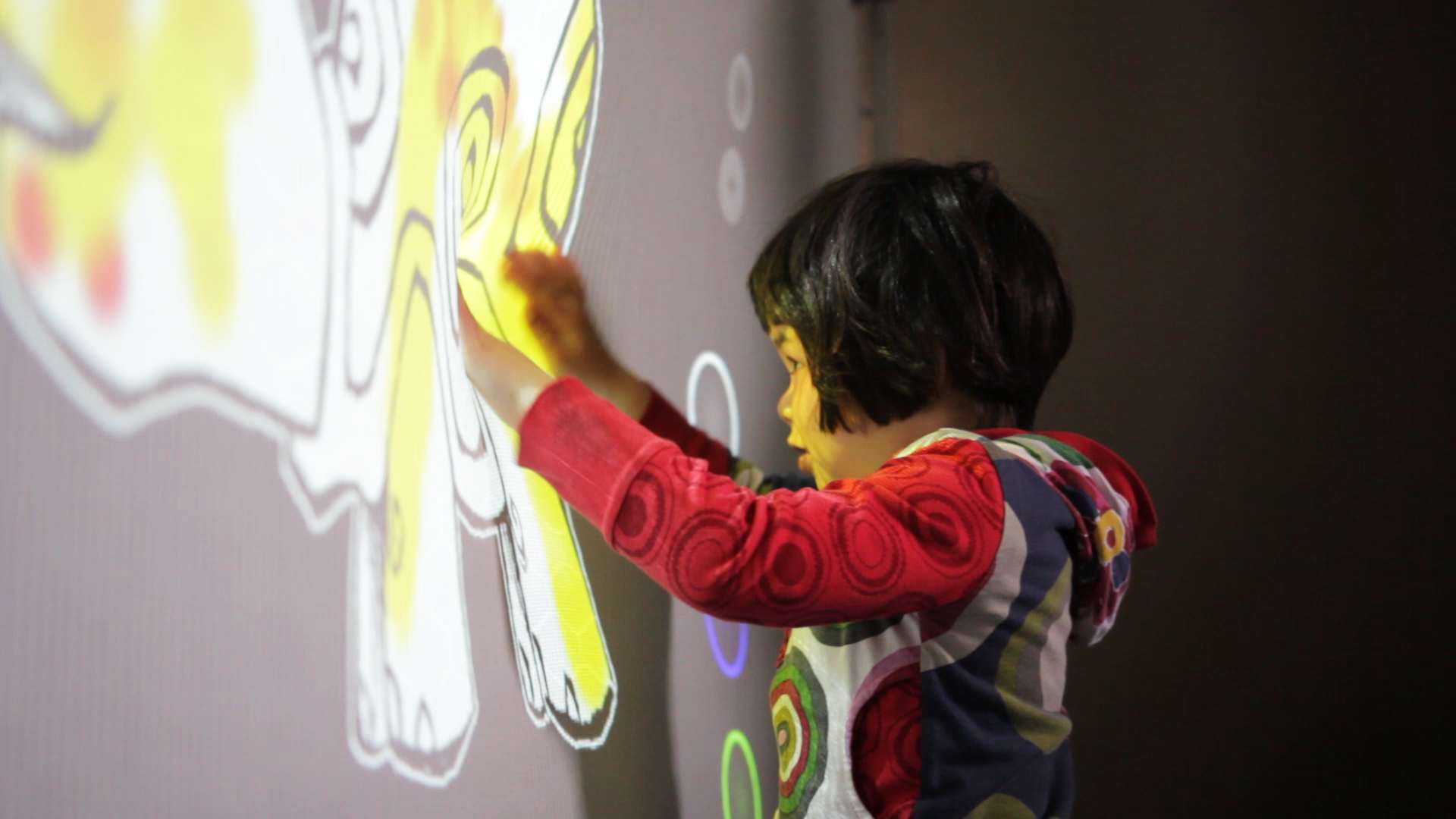

The sensorySURFACE | stretchCOLOR prototype, installed at the Spectrum Therapy Center in Ann Arbor.

Ara playing with the sensorySURFACE | stretchCOLOR prototype, where pressure applied to the 2D textile determines the color being projected onto the surface.

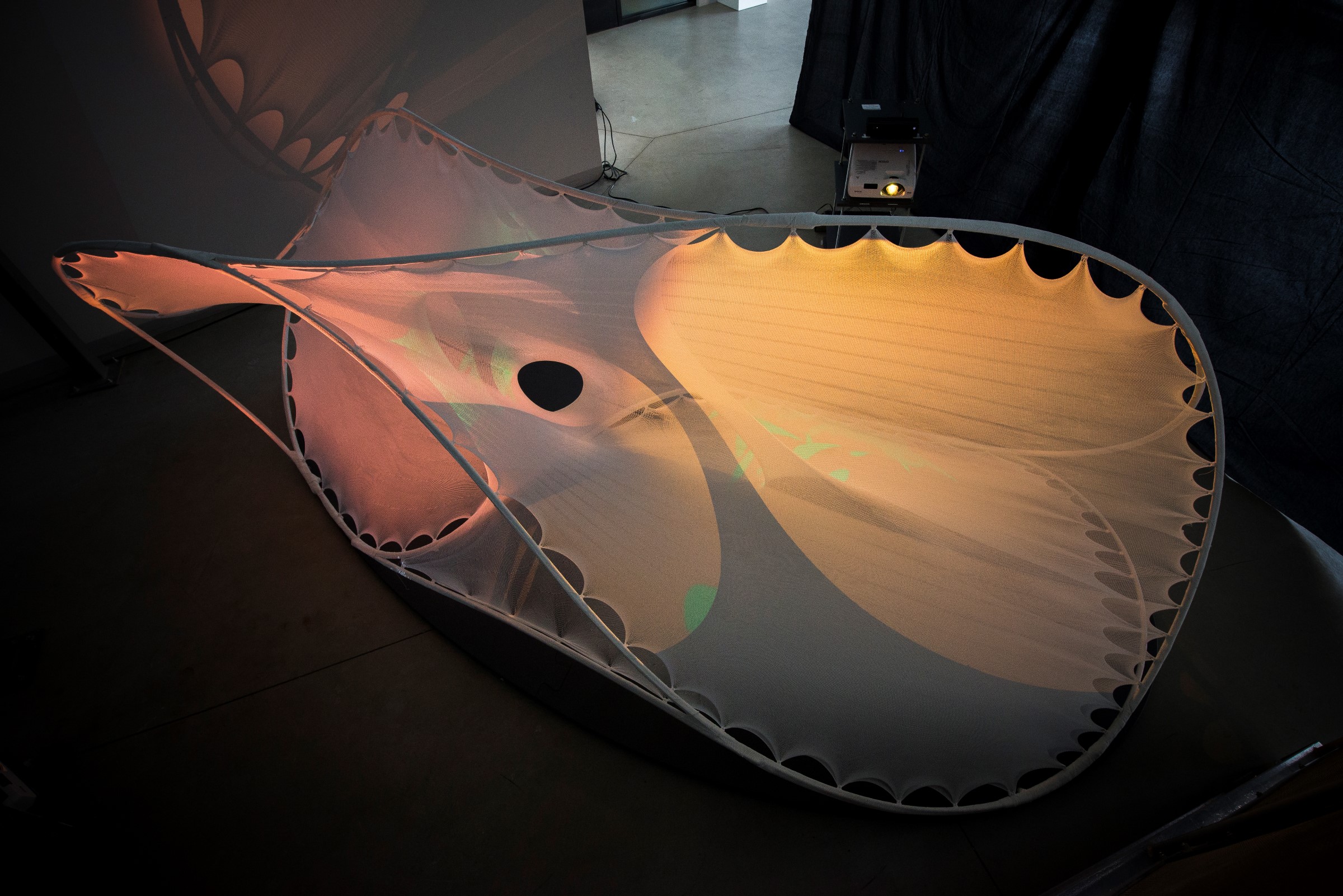

sensoryPLAYSCAPE (v1.0) textile hybrid prototype.

Touching the textile at key locations triggers animations for play across the structure of the sensoryPLAYSCAPE | stretchANIMATE prototype.

sensoryPLAYSCAPE (v2.0) textile hybrid prototype.

Ara playing with the sensoryPLAYSCAPE | stretchSWARM prototype which combines textile-hybrid technology with an interface for interacting with swarms of fish.

stretchSWARM software used with the sensoryPLAYSCAPE structure, where a long touch on the surface creates an attractor for a dynamic swarm of fish.

CREDITS

PROJECT: Tactile interfaces and environments for developing motor skills and social interaction in children with autism

Funding: University of Michigan, MCubed Interdisciplinary Seed Grant

Dates: 2015-current

Principal Investigators

Sean Ahlquist, Assistant Professor, University of Michigan - Architecture

Costanza Colombi, Research Assistant Professor, University of Michigan - Psychiatry

Dale Ulrich, Professor, University of Michigan - Kinesiology

Leah Ketcheson, Post-doctoral Student, University of Michigan - Kinesiology

Researchers

Architecture: Oliver Popadich, Shahida Sharmin, Adam Wang

Kinesiology: Erin Almony, Erika Goodman

Collaborators

Mark Burke, Director, Spectrum Therapy Center, Ann Arbor

Julian Lienhard, Structural Engineer, str.ucture, Germany

Onna Solomon, Director of Learning and Development, PLAY Project, Ann Arbor

PROJECT: Social Sensory Surfaces: Physical Computing, Tactile (Textile) Interfaces and Collaborative Tools for Children with Autism Spectrum Disorder

Funding: University of Michigan, Taubman College of Architecture and Urban Planning, Research Through Making Grant

Dates: 2014-15

Principal Investigators

Sean Ahlquist, Assistant Professor, University of Michigan - Architecture

Sile O'Modhrain, Associate Professor, University of Michigan - School of Music and School of Information

David Chesney, Senior Lecturer, University of Michigan - Computer Science

Researchers

Architecture: Taylor Boes, Evan Buetsch, Karen Duan, Patty Hazle, Yu‐Jen Lin, Henry Peters, Jason Chao‐Chung Yang

Computer Science: Evan Cann

Collaborators

Peter von Buelow, Structural Engineer, University of Michigan

Mary Burke, Director, Spectrum Therapy Center

Joshua Plavnick, Assistant Professor of Special Education, Michigan State University

Tabitha Wisecup, Assistant Director, Spectrum Therapy Center

![sensoryPLAYSCAPE[2] Technology-Embedded Textile Hybrid Prototype](https://images.squarespace-cdn.com/content/v1/5803cb362e69cf75b4a35fb0/1479922050671-MURNAUGX05TVXL9N7CZI/playscape_title.jpg)

![sensoryPLAYSCAPE[1] Technology-embedded Textile Hybrid Prototype](https://images.squarespace-cdn.com/content/v1/5803cb362e69cf75b4a35fb0/1479927991271-N81BW9D29K6L8PDDI2KV/playscape1_title.jpg)